Image Based Visual Servoing using RTT 1.0

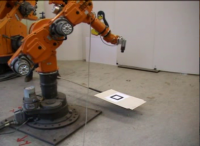

At KULeuven we successfully ran real experiments demonstrating image-based visual servoing. Every part of the application is built as an RTT TaskContext. The setup consists of a hacked industrial Kuka-361 robot, a FireWire camera attached to the robot's end-effector, and a Pentium IV PC.

Reused OCL-components

We use the existing hardware/camera components to get the images from the camera. The existing hardware/kuka components send the control outputs to the robot hardware. Reporting components write our results to a file for post-processing in Matlab. The motioncontrol/naxes and cartesian components allow us to initialize the robot for the visual servoing control.

New components

A new TaskContext lets us initialize the visual servoing by selecting the target in the image; this component runs in soft realtime. Another component does all the image processing and calculates the control output in hard realtime. A third TaskContext displays the resulting images in soft realtime so we can supervise the visual servoing.

Some numbers

The camera captures color images of 640x480 pixels at a frame rate of 60 fps. This is the actual bottleneck for the control loop, which therefore runs at 60 Hz in hard realtime. The component talking to the robot hardware runs at 500 Hz in hard realtime.
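To put these rates in perspective, the short sketch below turns them into cycle times. Only the 60 Hz and 500 Hz figures come from the text; the function names are made up for illustration.

```python
# Rates quoted in the text above.
CAMERA_RATE_HZ = 60.0   # image capture and visual control loop
ROBOT_RATE_HZ = 500.0   # component talking to the robot hardware


def period_ms(rate_hz):
    """Cycle time in milliseconds of a loop running at rate_hz."""
    return 1000.0 / rate_hz


def robot_cycles_per_image(robot_hz, camera_hz):
    """How many robot-interface cycles elapse between two new images."""
    return robot_hz / camera_hz


if __name__ == "__main__":
    print("visual control period: %.2f ms" % period_ms(CAMERA_RATE_HZ))
    print("robot interface period: %.2f ms" % period_ms(ROBOT_RATE_HZ))
    print("robot cycles per image: %.2f"
          % robot_cycles_per_image(ROBOT_RATE_HZ, CAMERA_RATE_HZ))
```

So the robot interface runs roughly eight times between two consecutive images, and each visual control output stays in effect for about that many hardware cycles.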

Some pictures and movies

A selected target for Image Based Visual Servoing: can be anything with good trackable features

Movie of the tracking

Slow motion

What is the reason you're moving so slowly if the control loop runs at 60 Hz? Is it just a precaution?

Low stability of visual feedback

Since the measurements (the tracked points in the image) are quite noisy, a low feedback gain has to be used to get stable control. Also, the visual feedback is only locally stable: it cannot deal with large errors in the image. Together, these limit us to slow motion. Maybe a Kalman filter could be used to estimate the motion and remove some of the noise from the control loop.
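The trade-off between noise and speed can be illustrated with a deliberately simplified model. This is not the controller used in the experiments. It assumes a single image-feature error e (in pixels) that is corrected each control cycle by a fraction `gain` of the measured error, where the measurement carries white noise n: e[k+1] = (1 - gain) * e[k] - gain * n[k]. The function names and the 5% convergence threshold are hypothetical choices for this sketch.

```python
import math


def steady_state_error_std(gain, noise_std):
    """Standard deviation of the residual error caused by measurement noise.

    Assumed model: e[k+1] = (1 - gain) * e[k] - gain * n[k], n white noise.
    Solving V = (1 - gain)^2 * V + gain^2 * noise_std^2 for the variance V
    gives V = gain * noise_std^2 / (2 - gain).
    """
    if not 0.0 < gain < 2.0:
        raise ValueError("this discrete loop is only stable for 0 < gain < 2")
    return noise_std * math.sqrt(gain / (2.0 - gain))


def steps_to_converge(gain, fraction=0.05):
    """Control cycles until the noise-free error falls below `fraction`
    of its initial value, using e[k] = (1 - gain)^k * e[0]."""
    if not 0.0 < gain < 1.0:
        raise ValueError("expected 0 < gain < 1")
    return math.ceil(math.log(fraction) / math.log(1.0 - gain))


if __name__ == "__main__":
    control_rate_hz = 60.0   # control loop rate from the text
    noise_std_px = 2.0       # assumed tracker noise, for illustration only
    for gain in (0.5, 0.2, 0.05):
        steps = steps_to_converge(gain)
        jitter = steady_state_error_std(gain, noise_std_px)
        print("gain %.2f: %3d cycles (%.2f s), residual jitter %.2f px"
              % (gain, steps, steps / control_rate_hz, jitter))
```

In this model, lowering the gain reduces the jitter that the measurement noise injects into the motion, but the number of cycles needed to remove an error grows accordingly. That is the same qualitative effect described above. A filter on the measurements would reduce the effective noise, which would allow a higher gain for the same jitter.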